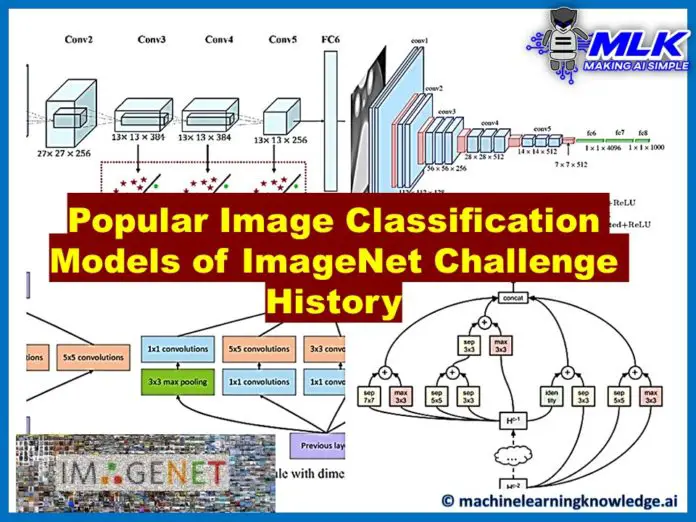

7 Popular Image Classification Models in ImageNet Challenge (ILSVRC) Competition History

Contents

- 1 Introduction

- 2 What is ImageNet ?

- 3 Who Created ImageNet ?

- 4 What is ImageNet Large Scale Visual Recognition Challenge (ILSVRC)

- 5 Popular Deep Learning Models of ImageNet Challenge (ILSVRC) Competition History

- 5.1 1. AlexNet | ILSVRC Competition – 2012 (Winner) | Top-5 Error Rate – 15.3%

- 5.2 2. ZFNet | ILSVRC Competition – 2013 (Winner) | Top-5 Error Rate – 11.2%

- 5.3 3. Inception V1 (GoogLeNet) | ILSVRC Competition – 2014 (Winner) | Top-5 Error Rate – 6.67%

- 5.4 4. VGG-16 | ILSVRC Competition – 2014 (Runners-Up) | Top-5 Error Rate – 7.3%

- 5.5 5. ResNet | ILSVRC Competition – 2015 (Winner) | Top-5 Error Rate – 3.57%

- 5.6 6. ResNeXt-10 | ILSVRC Competition – 2016 (Runners Up) | Top-5 Error Rate – 4.1%

- 5.7 7. PNASNet-5 | ILSVRC Competition – 2018 (Winner) | Top-5 Error Rate – 3.8%

- 6 (Bonus)

- 7 Conclusion

Introduction

Image classification is now often considered a largely solved problem, thanks to state-of-the-art deep learning models. The top-5 error rate of the best image classification models now hovers around 3%, whereas a decade back the best models had error rates of around 25%. Researchers played an important role in the emergence of deep learning, but the story would not be complete without the ImageNet visual database and its annual contest, whose millions of labeled images helped researchers train and benchmark their models. In this article, we will take a look at the popular deep learning models from the history of the ImageNet challenge, also known as the ImageNet Large Scale Visual Recognition Challenge or ILSVRC.

What is ImageNet ?

ImageNet is a visual dataset that contains more than 15 million labeled high-resolution images covering almost 22,000 categories. The dataset is of high quality, which is one of the reasons researchers so often use it to test their image classification models.

Who Created ImageNet ?

The idea of the ImageNet visual database was conceived in 2006 by Fei-Fei Li, now a Professor of Computer Science at Stanford University. In those days, she was working in the field of medical imaging and faced issues in designing machine learning models due to a lack of quality images.

Frustrated by the lack of quality image datasets for training ML models and inspired by the WordNet hierarchy, Fei-Fei Li and her team started a project called ImageNet that aimed to build a better image dataset.

It was an unusual step at the time: while other researchers were trying to improve ML algorithms, Fei-Fei Li decided to improve the dataset used to train them. The database was launched in 2009.

What is ImageNet Large Scale Visual Recognition Challenge (ILSVRC)

Building on the idea of training image classification models on the ImageNet dataset, an annual image classification competition, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), was launched in 2010.

ILSVRC uses a smaller portion of ImageNet consisting of only 1,000 categories. It has about 1.3 million training images, 50,000 validation images, and 100,000 test images.

Models participating in this competition have to perform object detection and image classification at large scale, and the models with the lowest top-1 and top-5 error rates are announced as the winners. The top-5 error rate is the percentage of images for which the correct label is not among the model’s five most likely labels; the top-1 error rate counts only the single most likely label.
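To make the top-1 and top-5 definitions concrete, here is a minimal sketch in plain Python. The function name `top_k_error` and the toy scores are made up for illustration; they are not part of any ILSVRC tooling.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of images whose true label is not among the k highest-scoring classes."""
    misses = 0
    for image_scores, true_label in zip(scores, labels):
        # Class indices sorted from the highest score to the lowest.
        ranked = sorted(range(len(image_scores)),
                        key=lambda c: image_scores[c], reverse=True)
        if true_label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Toy example: 3 images, 6 classes (hypothetical scores).
scores = [
    [0.05, 0.10, 0.50, 0.20, 0.10, 0.05],  # true class 2 is ranked first
    [0.30, 0.25, 0.20, 0.15, 0.08, 0.02],  # true class 4 is ranked fifth
    [0.40, 0.30, 0.15, 0.10, 0.04, 0.01],  # true class 5 is ranked last
]
labels = [2, 4, 5]

print("top-1 error:", top_k_error(scores, labels, k=1))
print("top-5 error:", top_k_error(scores, labels, k=5))
```

In this toy case the second image counts as a miss for top-1 but as a hit for top-5, which is why top-5 error is always less than or equal to top-1 error.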

Popular Deep Learning Models of ImageNet Challenge (ILSVRC) Competition History

In this section, we’ll go through the deep learning models that stood out in the history of the ImageNet challenge (ILSVRC). We’ll also look at the advantages they offer and where they fall short.

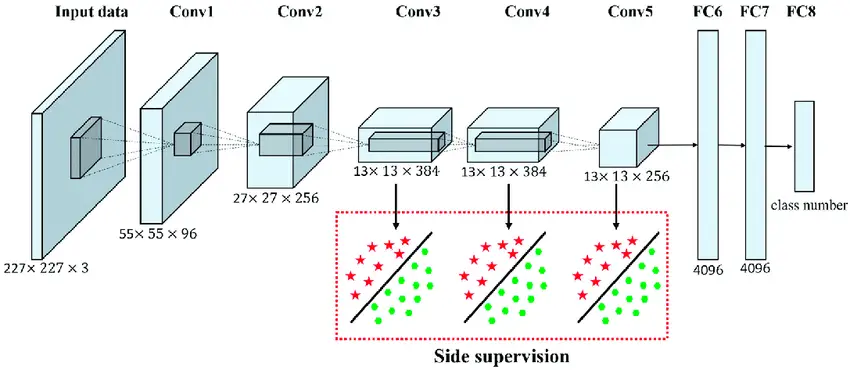

1. AlexNet | ILSVRC Competition – 2012 (Winner) | Top-5 Error Rate – 15.3%

AlexNet was a convolutional neural network designed by Alex Krizhevsky’s team that leveraged GPU training for better efficiency. Prior to 2012, the best image classification models had an error rate of around 25%, but AlexNet beat that by a wide margin with a top-5 error rate of 15.3% in the 2012 ImageNet challenge.

AlexNet is often regarded as the model that popularized convolutional neural networks and as the starting point of the deep learning boom. It has definitely earned cult status in the history of the ImageNet challenge (ILSVRC).

AlexNet contains 8 layers: 5 convolutional layers and 3 fully connected layers. It used the ReLU activation function to add nonlinearity and speed up convergence, and it leveraged multiple GPUs for faster training. Because AlexNet had 60 million parameters, which made it susceptible to overfitting, its creators used data augmentation and dropout to counter overfitting. AlexNet also used overlapping pooling to reduce the error.
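As a rough illustration of ReLU and overlapping pooling, the sketch below works on a single 1-D row of values instead of a full feature map. The window size of 3 with stride 2 is what the AlexNet paper describes, but it is not stated in this article, so treat it as an assumption; the helper names and input values are made up.

```python
def relu(values):
    """ReLU keeps positive values and replaces negative ones with zero."""
    return [max(0.0, v) for v in values]


def max_pool_1d(values, window, stride):
    """Max pooling over a 1-D list; windows overlap whenever stride < window."""
    return [
        max(values[start:start + window])
        for start in range(0, len(values) - window + 1, stride)
    ]


row = [1.0, -3.0, 2.0, 5.0, -1.0, 4.0, 0.0]  # hypothetical activations
activated = relu(row)

# Overlapping pooling: window 3, stride 2 (neighbouring windows share one value).
overlapping = max_pool_1d(activated, window=3, stride=2)
# Conventional pooling: window 2, stride 2 (no shared values).
non_overlapping = max_pool_1d(activated, window=2, stride=2)

print(activated)
print(overlapping)
print(non_overlapping)
```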

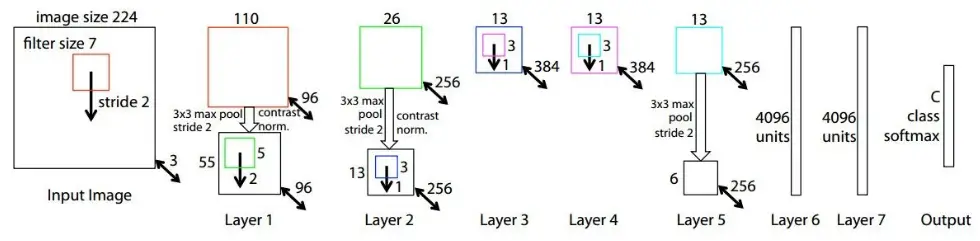

2. ZFNet | ILSVRC Competition – 2013 (Winner) | Top-5 Error Rate – 11.2%

ZFNet entered the ImageNet competition in 2013, the year after AlexNet had won. Dr. Rob Fergus, a professor at NYU, and his Ph.D. student Dr. Matthew D. Zeiler designed this new deep neural network and named it after the initials of their surnames.

It surpassed the results of AlexNet with an 11.2% error rate and was the winner of the 2013 ImageNet challenge.

ZFNet is considered an extended version of AlexNet with modified filter sizes for better accuracy. In the first convolutional layer, ZFNet used 7×7 filters, whereas AlexNet used 11×11 filters. The idea behind using smaller filters was to avoid losing pixel information.

Although ZFNet improved the way pixel information is extracted, it could not reduce the computational cost of going deeper into the network.
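The effect of the filter size can be sketched with the standard convolution output-size formula. The 224-pixel input, the strides (4 for the 11×11 filter, 2 for the 7×7 filter), and the zero padding below are assumptions chosen for illustration; this article does not give them, and the real networks differ in such details.

```python
def conv_output_size(input_size, kernel, stride, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (input_size + 2 * padding - kernel) // stride + 1


def weights_per_filter(kernel, in_channels=3):
    """Number of weights in one filter applied to an RGB image (bias ignored)."""
    return kernel * kernel * in_channels


INPUT_SIZE = 224  # assumed input resolution

large = conv_output_size(INPUT_SIZE, kernel=11, stride=4)  # AlexNet-style first layer
small = conv_output_size(INPUT_SIZE, kernel=7, stride=2)   # ZFNet-style first layer

print("11x11, stride 4:", large, "x", large, "map,", weights_per_filter(11), "weights per filter")
print("7x7, stride 2:  ", small, "x", small, "map,", weights_per_filter(7), "weights per filter")
```

Under these assumptions the smaller filter with the smaller stride produces a feature map about twice as large in each dimension, so less spatial detail is discarded in the first layer, but the later layers have more positions to process.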

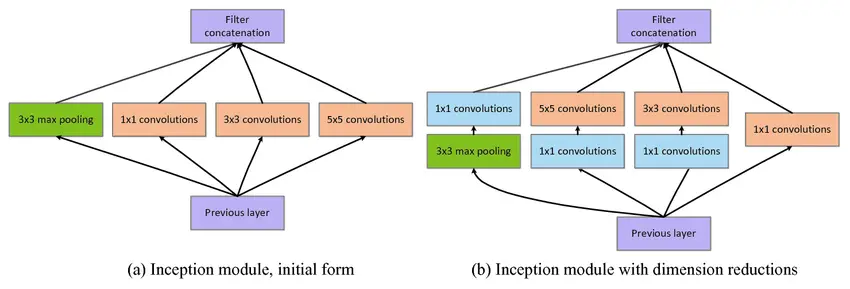

3. Inception V1 (GoogLeNet) | ILSVRC Competition – 2014 (Winner) | Top-5 Error Rate – 6.67%

Inception, developed by Google, is an image classification deep learning model that won the 2014 ImageNet challenge with an error rate of 6.67%. The v1 stands for the first version; later versions (v2, v3, etc.) followed. It is also popularly known as GoogLeNet.

The idea behind the architecture was to design a really deep network with 22 layers, something not seen in predecessors like ZFNet and AlexNet. To make this feasible, the team used 1×1 convolutions followed by ReLU, which increase computational efficiency by reducing the dimensions and the number of operations.
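A quick count of multiplications shows why a 1×1 convolution placed before a larger filter saves work. The feature-map size and channel counts below are hypothetical numbers picked for the example, not figures from this article.

```python
def conv_multiplications(height, width, in_channels, out_channels, kernel):
    """Multiplications for a stride-1 convolution that keeps the spatial size."""
    return height * width * out_channels * kernel * kernel * in_channels


# Hypothetical feature map and filter counts, chosen only for illustration.
H, W, C_IN = 28, 28, 192
C_OUT, C_REDUCED = 32, 16

# Option A: apply the 5x5 convolution directly to all 192 input channels.
direct = conv_multiplications(H, W, C_IN, C_OUT, kernel=5)

# Option B: first shrink 192 channels to 16 with a 1x1 convolution,
# then apply the 5x5 convolution to the reduced feature map.
reduce_step = conv_multiplications(H, W, C_IN, C_REDUCED, kernel=1)
expand_step = conv_multiplications(H, W, C_REDUCED, C_OUT, kernel=5)
bottleneck = reduce_step + expand_step

print(direct)      # 120422400
print(bottleneck)  # 12443648
```

With these numbers the 1×1 reduction cuts the multiplication count by roughly a factor of ten while producing an output of the same shape.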

4. VGG-16 | ILSVRC Competition – 2014 (Runners-Up) | Top-5 Error Rate – 7.3%

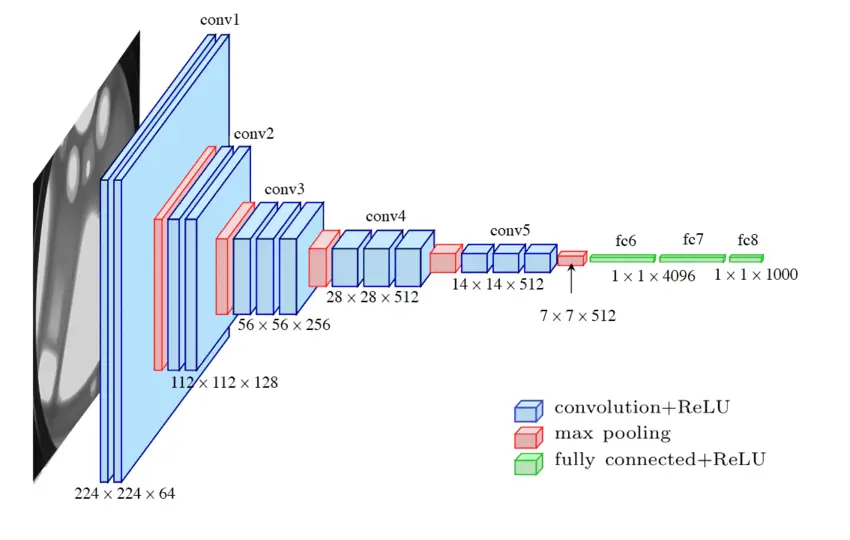

VGG-16 is a convolutional neural network model proposed by researchers at the University of Oxford. It was the first runner-up in the 2014 ImageNet competition with an error rate of 7.3%. Despite not winning the competition, the VGG-16 architecture was well received and went on to become one of the most popular image classification models.

The main characteristic of VGG-16 is that, instead of using large filters like AlexNet and ZFNet, it uses several 3×3 filters consecutively. The hidden layers of the network use ReLU activation functions. VGG-16 is, however, very slow to train, and its weights occupy a lot of disk space when saved.
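The trade-off behind stacking 3×3 filters can be checked with a little arithmetic: a stack of small stride-1 filters sees the same input region as one large filter, with fewer weights. The channel count of 64 below is an assumption for illustration.

```python
def stacked_receptive_field(kernel, layers):
    """Receptive field of `layers` stacked stride-1 convolutions of the same kernel size."""
    return 1 + layers * (kernel - 1)


def conv_weights(kernel, channels, layers=1):
    """Weights (biases ignored) when every layer maps `channels` inputs to `channels` outputs."""
    return layers * kernel * kernel * channels * channels


CHANNELS = 64  # assumed channel count

# Three stacked 3x3 layers cover the same 7x7 region as a single 7x7 layer.
print(stacked_receptive_field(kernel=3, layers=3))   # 7
print(conv_weights(kernel=3, channels=CHANNELS, layers=3))  # 110592
print(conv_weights(kernel=7, channels=CHANNELS))            # 200704
```

The stack also applies a ReLU after each of its three layers, so it adds more nonlinearity than the single large filter would.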

5. ResNet | ILSVRC Competition – 2015 (Winner) | Top-5 Error Rate – 3.57%

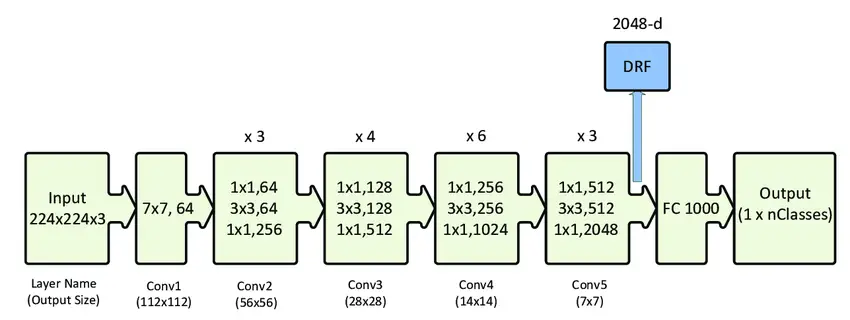

ResNet (Residual Network) was created by the Microsoft Research team and won the 2015 ImageNet competition with an error rate of 3.57%.

Many models had tried using numerous hidden layers and extremely deep neural networks, but it was then realized that such models suffered from the vanishing or exploding gradient problem. The Microsoft Research team came up with the ResNet architecture to counter this problem.

ResNet uses residual blocks and skip connections to increase the number of layers to 152 without running into the vanishing gradient problem. A skip connection adds a block’s input directly to its output through an identity mapping, so the block’s layers only have to learn the residual, that is, the difference between the desired output and the input. If a block has nothing useful to add, it can simply pass its input through unchanged.
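A residual block can be sketched in a few lines of plain Python. This toy version uses small fully connected layers instead of convolutions and omits batch normalization, so it only illustrates the skip connection itself; all names and values are made up.

```python
def relu(vector):
    return [max(0.0, v) for v in vector]


def linear(weights, vector):
    """Matrix-vector product; `weights` is a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def plain_block(x, w1, w2):
    """Two stacked layers without a skip connection."""
    return relu(linear(w2, relu(linear(w1, x))))


def residual_block(x, w1, w2):
    """y = relu(F(x) + x), where F(x) = w2 * relu(w1 * x) is the learned residual."""
    residual = linear(w2, relu(linear(w1, x)))
    return relu([r + xi for r, xi in zip(residual, x)])


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]  # weights that have learned nothing

print(plain_block(x, zeros, zeros))     # [0.0, 0.0, 0.0]: the signal is lost
print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 3.0]: the input passes through
```

With all-zero weights the plain block wipes out its input, while the residual block falls back to the identity, which is what makes very deep stacks of such blocks easier to train.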

6. ResNeXt-10 | ILSVRC Competition – 2016 (Runners Up) | Top-5 Error Rate – 4.1%

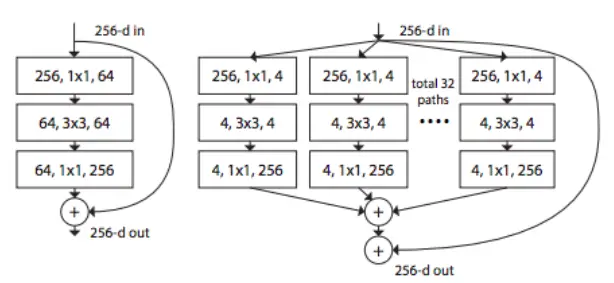

ResNeXt-10 was developed in a collaboration between researchers from UC San Diego and Facebook AI Research. It was inspired by previous models such as ResNet, VGG, and Inception. It was a runner-up in the 2016 ImageNet challenge but still became a popular model.

The idea behind ResNeXt was to stack blocks of the same shape and combine them with the ResNet approach of residual blocks. Hyper-parameters such as width and filter sizes are shared across the blocks.

Apart from this, ResNeXt introduces a dimension known as cardinality, which refers to the size of the set of transformations. The ResNeXt architecture uses 32 parallel paths with the same topology in each block, which means that the cardinality is 32. Because the topology is uniform, fewer hyper-parameters need to be chosen, and more layers can be added to the network.
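One way to see what cardinality buys is to count the weights of a bottleneck block with and without parallel paths. The channel sizes below (256 input channels, a width of 64 for the single-path block, and 32 paths of width 4 for the multi-path block) follow the comparison in the ResNeXt paper as best recalled; they do not appear in this article, so treat them as assumptions.

```python
def bottleneck_weights(in_channels, width, groups=1, kernel=3):
    """Weights (biases ignored) of a 1x1 -> grouped kxk -> 1x1 bottleneck block.

    `groups` is the cardinality: the middle convolution is split into that many
    parallel paths, and each path only sees width // groups channels.
    """
    reduce = in_channels * width                          # 1x1 convolution
    middle = kernel * kernel * (width // groups) * width  # grouped kxk convolution
    expand = width * in_channels                          # 1x1 convolution
    return reduce + middle + expand


# Single-path block: one 3x3 convolution over 64 channels.
single_path = bottleneck_weights(in_channels=256, width=64, groups=1)

# Multi-path block: cardinality 32, each path 4 channels wide (32 * 4 = 128).
multi_path = bottleneck_weights(in_channels=256, width=128, groups=32)

print(single_path)  # 69632
print(multi_path)   # 70144
```

Under these assumptions the two blocks have almost the same number of weights, so the 32 parallel paths come at roughly no extra parameter cost.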

7. PNASNet-5 | ILSVRC Competition – 2018 (Winner) | Top-5 Error Rate – 3.8%

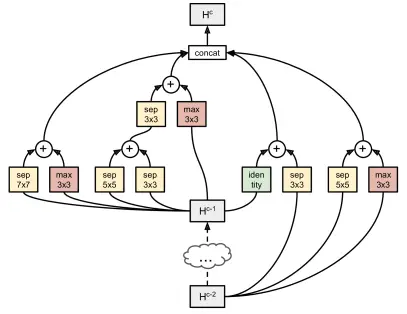

The PNAS in PNASNet stands for Progressive Neural Architecture Search. PNASNet-5 was found with a new way of learning the structure of convolutional neural networks (CNNs) that is more efficient than earlier methods based on reinforcement learning and evolutionary algorithms. The approach performs the search with sequential model-based optimization, exploring structures in order of increasing complexity. It was reported to be up to 5 times more efficient than the earlier reinforcement learning method in terms of the number of models evaluated, and 8 times faster in terms of total compute.

PNASNet-5 was the winner of the 2018 ImageNet challenge with an error rate of 3.8%.

This progressive strategy makes the search faster, and it allows a much more in-depth search of model structures.

(Bonus)

Human Beings | Top-5 Error Rate – 5.1%

We have seen that deep learning models have reached error rates of around 3% on image classification over the history of the ImageNet competition. So where do humans stand on this task with the ImageNet database?

To answer this, in 2017, research was conducted comparing state-of-the-art neural networks with human performance on the ImageNet dataset. It found that the neural network achieved a top-5 error rate of 3.57%, whereas human performance was limited to a top-5 error rate of 5.1%. This result suggests that, on this benchmark, deep neural networks can recognize and classify objects better than humans.

Conclusion

We have reached the end of the article. Here we learned about popular deep learning image classification models from the history of the ImageNet challenge (ILSVRC). The article also covered what ImageNet is, how it was built, and how the ILSVRC competition is organized around it each year. For each model, we looked at its advantages and drawbacks, and at how each successive model tried to improve on the shortcomings of its predecessor.
